- Published on 30 Oct 2018

Powerful image processing in a compact package: The TULIPP project

At first glance, advanced driver-assistance systems (ADAS), unmanned aerial vehicles (UAVs) and medical X-ray imaging have little in common, at least from the user’s point of view. From the developer’s point of view, however, they all need high-performance image processing, and they are all subject to the size, weight and power (SWaP) constraints common to embedded computing systems.

That’s how the Tulipp project started. We took a range of application domains as the basis for designing a common reference processing platform (the hardware, the operating system and its programming environment) that captures the real-time and high-performance requirements shared by the image processing and vision applications in all of them. We created a set of guidelines to help select the combinations of computing and communication resources to be instantiated in the platform while minimizing energy consumption and reducing development costs and time-to-market. These guidelines are based on existing standards, combined to optimize the ratio of performance to energy consumption.

Designing the Tulipp reference platform

The key insight of the Tulipp project is that the development of industrial embedded image processing applications can be captured by an abstraction known as the generic development process (GDP). The input to the GDP is a functionally correct implementation of the image processing system that runs on a desktop computer; the application is then moved onto the embedded platform in four steps. The first step is to partition the application so that it leverages the accelerators, such as graphics processing units (GPUs), and the reconfigurable fabrics, such as field-programmable gate arrays (FPGAs), commonly available on high-performance embedded systems-on-chip (SoCs).

Second, the implementations of the parts of the application designated for each accelerator are adapted to meet the accelerator’s requirements and characteristics (for example, by adopting a new programming model to expose parallelism). Third, the accelerated components are integrated and functional correctness is verified. Finally, the key performance indicators of interest, such as frame rate, power consumption or energy consumption, are measured and assessed. If the system requirements are not met, development restarts at the partitioning or accelerator implementation step. In summary, the GDP is an iterative process in which each iteration (hopefully) brings the image processing system closer to meeting the system requirements.
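The iterative structure of the GDP can be sketched as a simple loop. This is only an illustration: `run_gdp`, `Kpis`, `toy_pass` and the numbers used are hypothetical and are not part of the Tulipp tools.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Kpis:
    """Key performance indicators assessed at the end of each iteration."""
    frame_rate_hz: float
    power_w: float


def run_gdp(measure: Callable[[int], Kpis],
            min_frame_rate_hz: float,
            max_power_w: float,
            max_iterations: int = 10) -> int:
    """Iterate until the KPIs meet the requirements; return the iteration count.

    `measure(i)` stands in for one full pass: partition, adapt the
    accelerator implementations, integrate and verify, then measure.
    """
    for iteration in range(1, max_iterations + 1):
        kpis = measure(iteration)
        if kpis.frame_rate_hz >= min_frame_rate_hz and kpis.power_w <= max_power_w:
            return iteration
    raise RuntimeError("requirements not met within the iteration budget")


# Toy model with made-up numbers: each pass doubles the frame rate.
def toy_pass(iteration: int) -> Kpis:
    return Kpis(frame_rate_hz=2.0 * 2 ** iteration, power_w=5.0)


iterations_needed = run_gdp(toy_pass, min_frame_rate_hz=15.0, max_power_w=10.0)
```

In this toy model the requirements are met on the third pass; in a real project each pass is a costly engineering effort, which is why reducing the number of passes matters.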

The major goal of the developments within the Tulipp project is to minimize the number of iterations through the GDP, and our approach was to design a reference platform consisting of high-performance, low-power hardware, a real-time operating system, and productivity-enhancing utilities — a framework that efficiently supports the developer when carrying out the GDP.

To achieve this, a consortium of eight partners (Thales, Sundance, HIPPEROS, NTNU, Fraunhofer, Synective Labs, TUD and Efficient Innovation) joined forces to develop the Tulipp reference platform and make it publicly available. We decided to focus on three industrially relevant use cases covering three different domains: pedestrian detection (ADAS), depth map computation using stereo cameras (UAV), and medical X-ray image enhancement (medical).

However, it would not be sufficient simply to design a platform in 2018 and call it a reference platform, given the rapid pace at which technology improves. It is crucial that the reference platform is created at a level of abstraction where it can accommodate technology improvements. We’ve achieved this by documenting the process of designing the platform in a book that will form the basis for future standardization efforts. In addition, the insights of our experts have been captured in a set of publicly available guidelines, consisting of practical advice, best practices and recommended implementation methods that help developers select the optimal implementation strategy for their own applications.

In accordance with the GDP and our Tulipp guidelines, we’ve also produced a development kit consisting of:

- a Tulipp hardware platform developed by Sundance, our hardware platform provider, which is based on the Xilinx Zynq Ultrascale+™ multi-processor system-on-chip (MPSoC)

- the Tulipp operating system, based on the HIPPEROS multi-core operating system

- STHEM, the Tulipp toolchain, which was designed by NTNU and TUD

This development kit is available as a bundle and can be purchased from Sundance. It will also be provided for free to attendees of the tutorial session organized as part of a workshop on low-power high-performance embedded solutions at the HiPEAC conference in January 2019 in Valencia.

Along with the FinFET+ FPGA, the Xilinx Zynq Ultrascale+™ MPSoC contains a quad-core Arm Cortex™-A53 CPU, an Arm Mali™-400 MP2 graphics processing unit (GPU), and a real-time processing unit (RPU) containing a dual-core Arm Cortex™-R5 (a 32-bit real-time processor based on the Armv7-R architecture). The MPSoC implements many different interfaces, some of which can be used both externally and internally. This versatility helps the system adapt to legacy interfaces and allows the user to select the most suitable chip for their application. However, FPGA-based SoCs and MPSoCs are often available with different sizes of reconfigurable matrix. This would force board manufacturers to build as many board types as there are available chips, which is extremely expensive.

The solution to this problem is often to solder such chips on a smaller board called a module with standardized interfaces. This allows the board manufacturer to develop carrier boards with different kinds of input and output interface while keeping the same interfaces with the processing module. When developing a new application, system developers can create their own configuration by selecting the carrier board that covers their application needs and the processing element that copes with the processing requirements. This approach is also good for compatibility and standardization because it allows the processor to evolve while keeping the same interface with the carrier board. In addition, this approach also allows control of the components around the processor and helps ensure that the whole system is more stable.

The Tulipp hardware platform follows the PC/104 embedded computer standards. These standards are intended for specialized environments where a small, rugged computer system is required, and they define both form factors and computer buses. They allow customers to build a modular, customized embedded system by stacking together boards from a variety of commercial off-the-shelf (COTS) manufacturers.

Unfortunately, it is not sufficient to choose the best possible high-performance embedded vision hardware, along with a dedicated and optimized real-time operating system. In order to fully exploit a heterogeneous platform such as the Zynq Ultrascale+, application functions have to be mapped and scheduled on the available resources and accelerators. To help application designers understand the impact of their mapping choices, the Tulipp reference platform has been extended with performance analysis and power measurement features. Specifically, NTNU and TUD developed the STHEM toolchain, consisting of the following generic functions that complement and refine the capabilities of existing platforms for embedded vision applications:

- a novel power measurement and analysis function

- a platform-optimized image processing library

- a dynamic partial reconfiguration function

- a function providing support for using the real-time OS HIPPEROS within the Xilinx software development environment SDSoC™

The main feature of STHEM is the ability to non-intrusively profile power consumption and processor program counter values and thereby directly attribute energy consumption to source code constructs (e.g. for loops and procedures). This is enabled by our custom Lynsyn power measurement unit, which uses the JTAG hardware debug interface to concurrently sample platform power consumption and program counter values. STHEM supports a variety of ways of visualizing these profiles, which aid application developers in identifying power consumption and performance issues.
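The attribution idea can be illustrated with a minimal sketch. It assumes a stream of (program counter, power) samples taken at a fixed period and a symbol table that maps start addresses to function names. The addresses, function names and sample values are all made up, and this is not the Lynsyn or STHEM data format.

```python
from bisect import bisect_right
from collections import defaultdict

# Hypothetical symbol table: (start address, function name), sorted by address.
SYMBOLS = [(0x1000, "main"), (0x1400, "filter_rows"), (0x1800, "filter_cols")]
STARTS = [start for start, _ in SYMBOLS]


def function_at(pc: int) -> str:
    """Map a program counter value to the function whose range contains it."""
    index = bisect_right(STARTS, pc) - 1
    return SYMBOLS[index][1] if index >= 0 else "<unknown>"


def attribute_energy(samples, period_s: float) -> dict:
    """Sum power * sampling period per function.

    `samples` is an iterable of (pc, power_in_watts) pairs taken at a
    fixed sampling period, so each sample accounts for power * period joules.
    """
    energy_j = defaultdict(float)
    for pc, power_w in samples:
        energy_j[function_at(pc)] += power_w * period_s
    return dict(energy_j)


# Made-up samples taken every 1 ms.
samples = [(0x1004, 2.0), (0x1410, 3.0), (0x1420, 3.0), (0x1810, 4.0)]
profile = attribute_energy(samples, period_s=0.001)
```

Here `filter_rows` is hit by two samples at 3 W, so it is charged about 6 mJ. Sorting such a profile by energy points the developer at the code that is worth optimizing or moving to an accelerator.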

In addition, STHEM contains a number of other utilities, such as a high-level FPGA library for image processing. This library is highly optimized and parametrizable, and includes 28 vision functions based on the OpenVX specification. Thanks to its structure, the library is straightforward to use, and it is easily portable because it has no dependencies on other libraries. It uses SDSoC and Vivado high-level synthesis (HLS) directives, which can easily be exchanged for those of any other HLS tool. Furthermore, the library is not restricted to any particular SDSoC version. Most functions support additional data types for higher precision and more flexibility, as well as auto-vectorization for easy-to-use performance optimization. Collectively, these functions facilitate the efficient development of image processing applications on the Tulipp hardware platform.

As stated above, we had three use cases in different application domains in mind when developing our reference platform. The first use case adds a computing board to a medical X-ray sensor, helping to reduce radiation by reducing noise in the images. The second use case equips a UAV with real-time obstacle detection and avoidance capabilities based on a lightweight and low-cost stereo camera setup. The third use case enables pedestrian detection algorithms to run in real time on a small, energy-efficient embedded platform.

Advanced image enhancement algorithms for X-ray images, running at high frame rates

The TULIPP medical use case centres on the mobile C-arm, a medical system that displays X-ray views from inside a patient’s body during an operation, greatly enhancing the surgeon’s ability to perform surgery. The system allows the surgeon to target the region much more precisely and to make small incisions rather than large cuts. This leads to faster patient recovery and a lower risk of hospital-acquired infection. The drawback of this technique is the radiation dose, which is 30 times higher than the dose we receive from our natural surroundings each day. These high doses of radiation are received not only by the patient but also by the medical staff, who carry out such interventions all day long, several days a week.

Since the sensitivity of the sensor is very high, one could try to reduce the dose of radiation. However, this also increases the noise level in the images, making them almost unreadable. Luckily, this effect can be corrected with proper image processing. Due to the high resolution of X-ray images, this procedure needs a lot of computing resources and, to complicate matters, the system has to meet strict real-time constraints because of the live display function. With specific noise reduction algorithms running on high-end PCs, it is possible to lower the radiation dose and restore the original quality of the picture. Unfortunately, in a confined environment such as an operating room, crowded with staff and equipment, size and mobility are of key importance, making the use of high-end PCs impractical. By bringing the computing power of a PC to hardware the size of a smartphone, Tulipp makes it possible to use a quarter of the radiation while maintaining the picture quality.

Real-time obstacle avoidance system for UAVs based on a stereo camera

Despite all the publicity about autonomous drones, most current systems are still remotely piloted by humans. A human on the ground has to constantly monitor both the payload of the drone in order to successfully accomplish the mission (e.g. to capture the desired data) and the drone’s flight in order to avoid collisions with obstacles. The simultaneous operation of the UAV and of the sensor payload is a challenging task. Mistakes can threaten either the success of the mission or — much worse — human safety. Moreover, the UAV’s area of operation may be limited due to the need for a constantly available communication link between the UAV and the remote control station.

A major improvement could be made if UAVs were capable of autonomous navigation, or at least if they had an obstacle detection and avoidance capability. This capability can be achieved by means of additional sensors, such as ultrasonic sensors, radars, laser scanners, or video cameras monitoring the UAV surroundings. However, ultrasonic sensors have a very limited range, radar sensors might “overlook” non-metal objects, while laser scanners are heavy and energy-intensive and thus not suitable for applications with UAVs, which have tight weight and power constraints. Hence, our work within Tulipp focused on obstacle avoidance based on a lightweight stereo camera setup with cameras orientated in the direction of flight.

We use disparity maps, computed from the camera images, to locate obstacles in the flight path and to automatically steer the UAV around them. We used high-level synthesis (HLS) to port the semi-global matching (SGM) disparity estimation algorithm, written in C/C++, to the embedded FPGA, and achieved a frame rate of 29 Hz and a processing latency of 28.5 ms for images sized 640×360 pixels. The disparity maps are then converted into simpler representations, so-called U-/V-Maps, which are used for obstacle detection. Obstacle avoidance is based on a reactive approach that finds the shortest path around the obstacles as soon as they are within critical distance of the UAV.
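To illustrate what a U-/V-Map is, here is a minimal sketch using the common definition: the U-Map is a per-column histogram of disparity values and the V-Map is a per-row histogram. The function name `uv_maps` and the toy data are hypothetical, and this is not the FPGA implementation used in the project.

```python
def uv_maps(disparity, num_disparities):
    """Build U- and V-disparity histograms from a 2-D integer disparity map.

    u_map[d][x] counts pixels in column x with disparity d.
    v_map[y][d] counts pixels in row y with disparity d.
    Values outside [0, num_disparities) are treated as invalid and skipped.
    """
    height, width = len(disparity), len(disparity[0])
    u_map = [[0] * width for _ in range(num_disparities)]
    v_map = [[0] * num_disparities for _ in range(height)]
    for y, row in enumerate(disparity):
        for x, d in enumerate(row):
            if 0 <= d < num_disparities:
                u_map[d][x] += 1
                v_map[y][d] += 1
    return u_map, v_map


# Toy 3x4 disparity map: an "obstacle" with disparity 3 in columns 1 and 2.
disparity = [
    [0, 3, 3, 0],
    [0, 3, 3, 0],
    [1, 1, 1, 1],
]
u_map, v_map = uv_maps(disparity, num_disparities=4)
```

An object at roughly constant distance has roughly constant disparity, so it shows up as a compact run of high counts in one disparity row of the U-Map (columns 1 and 2 of `u_map[3]` here). Finding such runs is much cheaper than analysing the full disparity image.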

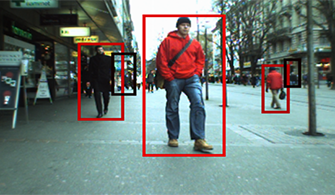

Pedestrian recognition in real time

Nowadays, most advanced driver assistance systems (ADAS) rely on vision systems or on combinations of vision and radar. Modern image processing algorithms are capable of extracting almost all the information required about the vehicle’s surroundings. However, there is often quite a big step between the first prototypical implementation on a desktop PC and the integration into a small and energy-efficient electronic control unit (ECU). In Tulipp we focused on the pedestrian detection application based on Viola-Jones classifiers.

To implement the application on the Tulipp hardware we used SDSoC, which offers a smooth optimization path from the “C” version of the code running on the Arm processors of the Zynq towards FPGA-optimized parts. An ADAS algorithm must of course run in real time, which means it should be able to process a video image stream at full rate (30 Hz), or at least at half rate. This algorithm, however, took roughly 10 seconds per frame on an ordinary PC, and almost 30 seconds per frame on the Arm platform, so the required speed-up was significant. The frame size used here is 640×480 pixels.

One of the “classical” problems with the Viola-Jones algorithm is its non-sequential pattern of memory accesses to the integral images. On the FPGA platform in particular, a second problem is the limited memory space embedded in the FPGA logic. By totally restructuring the computational order, a more efficient access pattern could be used without changing the results. The classifier data, which is different for each scale, is then streamed through the FPGA, and the on-chip memory can hold all the data needed for running one scale at a time.
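For readers unfamiliar with integral images, the following minimal sketch shows the textbook construction and the four-corner lookup that Viola-Jones features rely on. It is a plain software illustration with hypothetical function names, not the restructured FPGA version described above.

```python
def integral_image(image):
    """Return a summed-area table with one extra row and column of zeros.

    ii[y][x] holds the sum of all pixels above row y and left of column x.
    """
    height, width = len(image), len(image[0])
    ii = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
ii = integral_image(image)
total = rect_sum(ii, 0, 0, 3, 3)         # whole image
bottom_right = rect_sum(ii, 1, 1, 2, 2)  # 5 + 6 + 8 + 9
```

Each rectangle sum costs only four reads, but those reads touch two different rows at arbitrary columns. With thousands of features evaluated per window, this produces the scattered access pattern mentioned above.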

After these optimizations, the final implementation, which uses the FPGA logic in combination with the Arm cores, reached roughly 15 Hz, i.e. about 66 ms of processing time per frame. This means that the algorithm can process every second image if the camera runs at 30 Hz.
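The size of the speed-up can be worked out from the figures quoted in the text. Since those figures are rounded ("almost 30 seconds", "roughly 15Hz"), the results below are order-of-magnitude estimates only, and the variable names are ours.

```python
arm_seconds_per_frame = 30.0   # "almost 30 seconds per frame" on the Arm cores
final_rate_hz = 15.0           # "roughly 15Hz" after optimization
camera_rate_hz = 30.0          # full video rate

# Processing time per frame of the final implementation, in milliseconds.
final_ms_per_frame = 1000.0 / final_rate_hz

# Speed-up relative to the software-only version on the Arm cores.
speedup_achieved = arm_seconds_per_frame * final_rate_hz

# Speed-up that would be needed to process every frame at full rate.
speedup_for_full_rate = arm_seconds_per_frame * camera_rate_hz

# Number of camera frames that arrive per processed frame.
camera_frames_per_result = camera_rate_hz / final_rate_hz
```

Under these assumptions the optimized implementation is roughly 450 times faster than the starting point on the Arm cores, and a further factor of two would be needed to process every frame of a 30 Hz stream.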

So what’s next for TULIPP? We’ll be working on bringing even more intelligence to the platform by adding new accelerator technology dedicated to artificial neural networks.

By Philippe Millet (Thales)

Diana Göhringer (TU Dresden)

Michael Grinberg, Igor Tchouchenkov (Fraunhofer IOSB)

Magnus Jahre (Norwegian University of Science and Technology)

Magnus Peterson (Synective Labs AB)

Ben Rodriguez (HIPPEROS SA)

Flemming Christensen (Sundance Multiprocessor Technology Ltd)

Fabien Marty (Efficient Innovation)

TULIPP has been named as a runner-up in the European Commission ECS Innovation Award, presented at the European Forum for Electronic Components and Systems 2018.

Interested in finding out more about TULIPP’s work? Don’t miss the workshop at the HiPEAC Conference:

TULIPP: Energy Efficient Embedded Image Processing: Architectures, Tools and Operating Systems

https://www.hipeac.net/2019/valencia/schedule